Shayne Longpre

AI Research Scientist

MIT

About me

I am a PhD Candidate at MIT. My research focuses on AI and its impact on the world: I develop data-centric methods to build AI systems, and turn the lens around to expose AI’s impact on the web, the economy, and people. I lead the Data Provenance Initiative, led the Open Letter on A Safe Harbor for Independent AI Evaluation & Red Teaming, and have contributed to training models like Bloom, Aya, and Flan-T5/PaLM. I’m thankful for the recognition my research has received: Best Paper Awards at ACL 2024 and NAACL 2024, as well as coverage by the NYT, Washington Post, Atlantic, 404 Media, Vox, and MIT Tech Review.

Previously, I conducted AI research at Google, Apple, Salesforce Research, and Stanford, where I received my MS in Computer Science and BA in Economics while competing as a rower and exploring a passion for creative writing.

I am on the faculty and industry job markets, looking for the right role, and would be grateful to hear of relevant positions. See my full CV here and my full list of publications here.

Recent News

2025.10: My new paper on Open Model Concentration of Power was released and featured in the Financial Times.

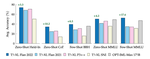

2025.09: My new paper on Multilingual Scaling and Mixing Laws was released.

2025.05: Our research on the Leaderboard Illusion was accepted at NeurIPS 2025 and covered by TechCrunch.

2025.04: Delivered three keynotes at ICLR 2025 workshops: SCI-FM, Building Trust in LLMs, and MLDPR.

2025.03: Our new position paper on Third-Party AI Flaw Disclosure and accompanying blog post were released and covered by Wired.

2025.01: Published my Op-Ed on the AI Data Crawler Wars in MIT Technology Review.